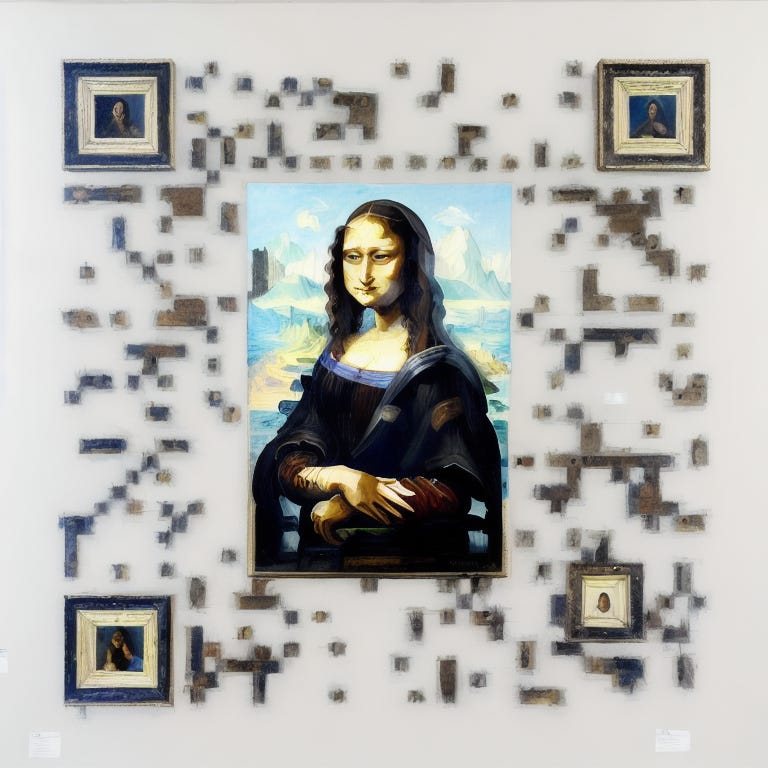

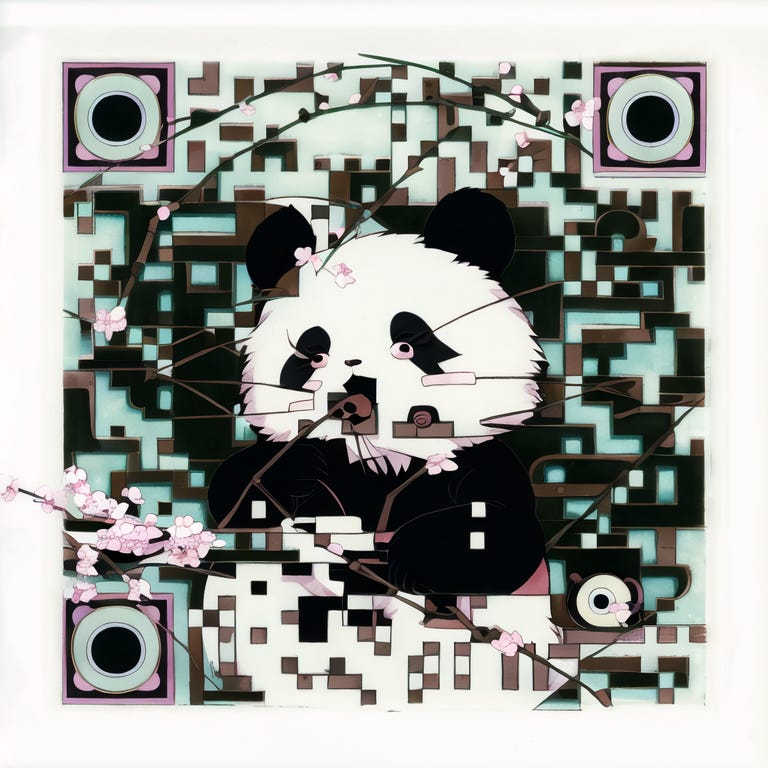

ControlNet QR Codes

Image diffusion models like Stable Diffusion can generate all kinds of images, from photorealistic scenes to stylized work (e.g., in the style of Studio Ghibli or Leonardo da Vinci).

We started to control the output of these models with prompt engineering: finding the right combination of tokens to mix and match to get great images. Then we started curating the dataset, e.g., fine-tuning on images taken with a DSLR camera. Then came low-rank adaptation (LoRA), which fine-tunes a model cheaply by training small low-rank weight updates instead of the full weights.

ControlNet is another model for controlling Stable Diffusion, this time via extra conditions. A condition can be an outline, the pose of a subject, a depth map, or anything else you train your own ControlNet on (the model trains quickly and doesn't need many training samples; as few as 1,000 can work).
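For the curious, here is roughly how the extra condition gets in, as described in the original ControlNet paper (Zhang et al., 2023). Each conditioned block $\mathcal{F}$ of the frozen Stable Diffusion network is paired with a trainable copy, and the two are joined by "zero convolutions" $\mathcal{Z}$ (1×1 convolutions whose weights and biases start at zero):

$$
y_c = \mathcal{F}(x; \Theta) + \mathcal{Z}\big(\mathcal{F}(x + \mathcal{Z}(c; \Theta_{z1}); \Theta_c); \Theta_{z2}\big)
$$

Here $x$ is the block's input feature map, $c$ is the condition (an outline, a pose, a depth map, or a QR code image), $\Theta$ are the frozen weights, $\Theta_c$ the trainable copy, and $\Theta_{z1}, \Theta_{z2}$ the zero-convolution parameters. Because both $\mathcal{Z}$ terms output zero at initialization, $y_c = \mathcal{F}(x; \Theta)$ when training starts, so the base model's behavior is untouched until the copy learns something useful. That is plausibly part of why it trains quickly on relatively little data.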

ControlNet solves the “draw the owl” meme (“How to draw an owl. 1. Draw some circles. 2. Draw the rest of the fucking owl.”): you supply the circles as a condition, and the model draws the rest.

It’s still more art than science, but it has already produced interesting results.

The author of the QR code ControlNet trained their own ControlNet model, but I’ve been experimenting with off-the-shelf models and some QR code generation hacks. There’s still a lot of work to be done, but try scanning these QR codes with your phone (they should work!).
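The exact hacks aren't spelled out here, but one step any of them needs is turning a QR code into a conditioning image, since ControlNet conditions on pixels, not on data. Below is a minimal stdlib-only sketch, assuming you already have the QR module matrix from some encoder as a square list of rows of booleans (`True` = dark module). Encoding at error-correction level H, which can recover roughly 30% of damaged codewords, leaves the most room for the diffusion model to restyle modules and still scan. The function names `render_control_image` and `to_pgm` and their parameters are hypothetical, chosen for illustration.

```python
def render_control_image(modules, box_size=8, border=4):
    """Render a QR module matrix as a grayscale pixel grid (hypothetical helper).

    modules:  square list of rows of booleans, True = dark module (assumed input format).
    box_size: pixels per module edge.
    border:   quiet-zone width in modules (the QR spec asks for at least 4).
    Returns a list of pixel rows where 0 = black and 255 = white.
    """
    if box_size < 1 or border < 0:
        raise ValueError("box_size must be >= 1 and border >= 0")
    size = len(modules)
    if size == 0 or any(len(row) != size for row in modules):
        raise ValueError("QR module matrix must be square and non-empty")

    # Start with an all-white canvas that includes the quiet zone on every side.
    total = (size + 2 * border) * box_size
    pixels = [[255] * total for _ in range(total)]

    # Paint each dark module as a box_size x box_size black square.
    for r, row in enumerate(modules):
        for c, dark in enumerate(row):
            if not dark:
                continue
            top = (r + border) * box_size
            left = (c + border) * box_size
            for y in range(top, top + box_size):
                for x in range(left, left + box_size):
                    pixels[y][x] = 0
    return pixels


def to_pgm(pixels):
    """Encode a grayscale pixel grid as a binary PGM (P5) file, viewable in most image tools."""
    height, width = len(pixels), len(pixels[0])
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(v for row in pixels for v in row)
```

For a real code you would pass the encoder's full module matrix, write `to_pgm(...)` to a `.pgm` file, and use that image as the ControlNet condition alongside your prompt. A square, crisp, high-contrast image with a proper quiet zone keeps the finder patterns legible to the model.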